Here is how I did it in a Jupyter notebook:

1. Download the jars from Maven Central or any other repository and put them in a directory called "jars":

mongo-spark-connector_2.11-2.4.0

mongo-java-driver-3.9.0
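Before starting Spark, it can help to confirm that both jars are actually in place. The following is a minimal sketch, not part of the original setup: the helper name `missing_jars` is hypothetical, and it assumes the files carry a `.jar` suffix and sit in a `jars` directory next to the notebook.

```python
from pathlib import Path

# Jar base names come from the list above; the ".jar" suffix is an assumption.
REQUIRED_JARS = [
    "mongo-spark-connector_2.11-2.4.0.jar",
    "mongo-java-driver-3.9.0.jar",
]


def missing_jars(jar_dir="jars", required=REQUIRED_JARS):
    """Return the required jar file names that are not present in jar_dir."""
    directory = Path(jar_dir)
    return [name for name in required if not (directory / name).is_file()]
```

Calling `missing_jars()` in a notebook cell returns an empty list when both files are present, and the names of the absent files otherwise.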

2. Create a session and write/read any data:

from pyspark.sql import SparkSession
from pyspark.sql.functions import col

# Wildcard path that picks up every jar in the "jars" directory
working_directory = 'jars/*'

# config() takes the key and the value as two separate arguments
my_spark = SparkSession \
    .builder \
    .appName("myApp") \
    .config("spark.mongodb.input.uri", "mongodb://127.0.0.1/test.myCollection") \
    .config("spark.mongodb.output.uri", "mongodb://127.0.0.1/test.myCollection") \
    .config('spark.driver.extraClassPath', working_directory) \
    .getOrCreate()

# Sample data to write
people = my_spark.createDataFrame(
    [("JULIA", 50), ("Gandalf", 1000), ("Thorin", 195), ("Balin", 178), ("Kili", 77),
     ("Dwalin", 169), ("Oin", 167), ("Gloin", 158), ("Fili", 82), ("Bombur", 22)],
    ["name", "age"])

# Append the rows to the collection named in the output URI
people.write.format("com.mongodb.spark.sql.DefaultSource").mode("append").save()

# Read the collection back and show only the matching row
df = my_spark.read.format("com.mongodb.spark.sql.DefaultSource").load()
df.select('*').where(col("name") == "JULIA").show()

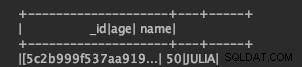

Como resultado, você verá isto:
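If you need to point the same setup at a different host, database, or collection, the settings can be collected in one place. This is only a sketch under assumptions: the helper name `mongo_spark_conf` and its parameters are hypothetical, and it reuses the three configuration keys from the session setup above. Each entry is meant to be passed to `SparkSession.builder.config` as a separate key and value.

```python
def mongo_spark_conf(host, database, collection, jar_glob="jars/*"):
    """Build the Spark settings from the answer as a dict of key -> value."""
    # Same URI shape as in the answer: mongodb://<host>/<database>.<collection>
    uri = f"mongodb://{host}/{database}.{collection}"
    return {
        "spark.mongodb.input.uri": uri,
        "spark.mongodb.output.uri": uri,
        "spark.driver.extraClassPath": jar_glob,
    }


conf = mongo_spark_conf("127.0.0.1", "test", "myCollection")

# Applying the settings needs pyspark, so this part is left as comments:
# builder = SparkSession.builder.appName("myApp")
# for key, value in conf.items():
#     builder = builder.config(key, value)
# my_spark = builder.getOrCreate()
```

Keeping the key and the value apart in a dict makes it harder to accidentally pass a single `"key=value"` string to `config`.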